Project Description

AI technologies now play various communicator roles, from content producers (e.g., automated news generation) to interpersonal interlocutors (e.g., voice assistants). However, the "black-box" nature of AI systems has raised serious concerns, especially when they are used to automate important decisions in domains such as health, finance, and criminal justice. In the field of Explainable AI (XAI), researchers have sought technical solutions that make AI decisions more transparent. Focusing on the potential of XAI to raise awareness of algorithmic bias, this study presents one of the earliest empirical efforts to examine whether state-of-the-art XAI approaches can effectively improve user understanding of AI decisions and help users identify potential biases in AI systems.

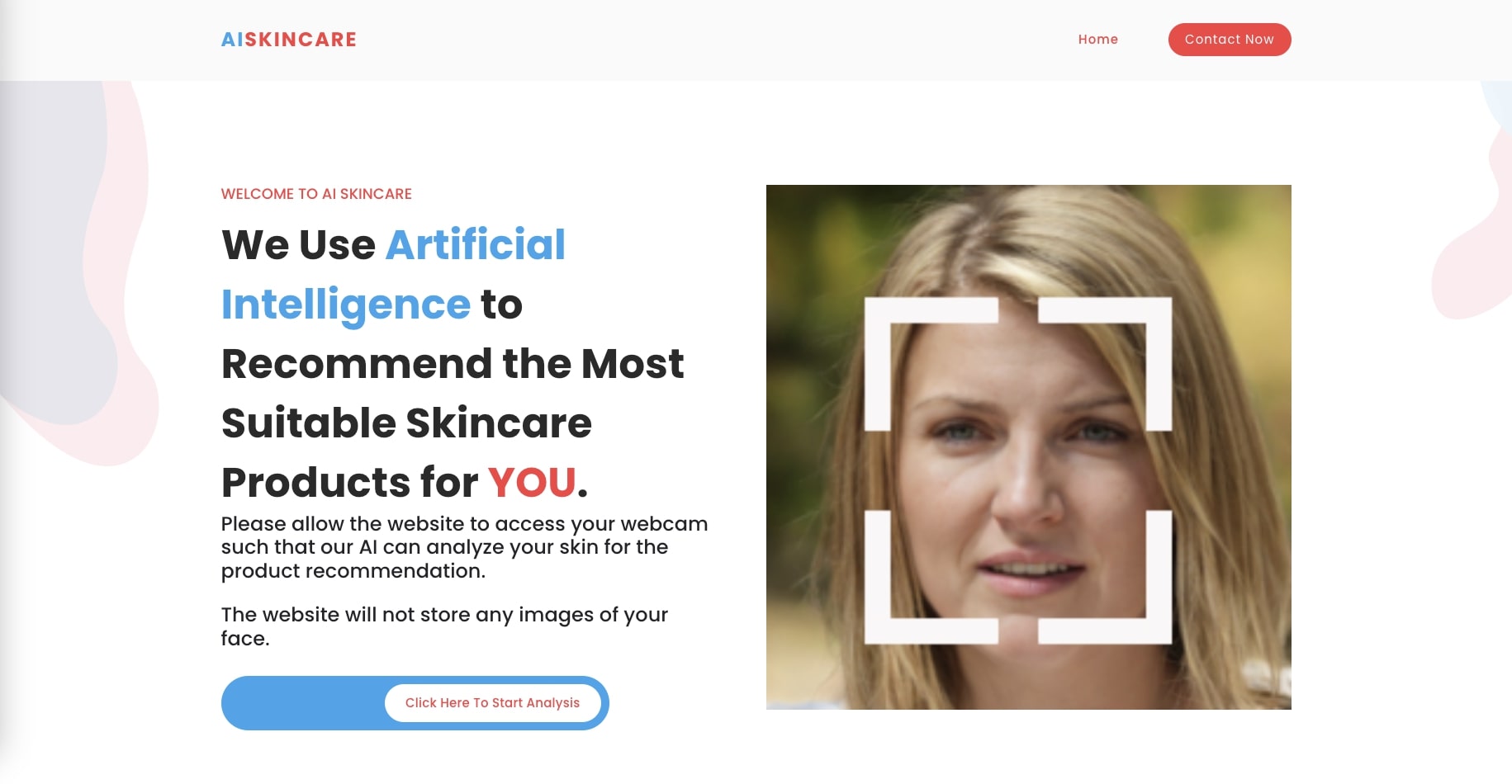

We examined the popular "explanation by example" XAI approach, in which users receive explanatory examples that resemble their own input. Because this approach lets users gauge how well these examples match their own circumstances, perceived incongruence can evoke perceptions of unfairness and exclusion. This discourages users from placing blind trust in the system and raises awareness of algorithmic bias stemming from non-inclusive datasets. The results further highlight the moderating role of users' prior experience with discrimination. This study joins ongoing calls for more interdisciplinary research that provides human-centered guidance on developing and evaluating XAI approaches for mitigating algorithmic bias.